Basics of Machine Learning Series

Introduction

Gradient descent is an algorithm which is used to reach an optimal solution iteratively using the gradient of the loss function or the cost function. In contrast, normal equation is a method that helps solve for the parameters analytically i.e. instead of reaching the solution iteratively, solution for the parameter \(\theta\) is reached at directly by solving the normal equation.

Intuition

Consider a one-dimensional equation for the cost function given by,

- Where \(\theta \in \mathbb{R} \)

According to calculus, one can find the minimum of this function by calculating the derivative and solving the equation by setting derivative equal to zero, i.e.

Similarly, extending (1) to multi-dimensional setup, the cost function is given by,

- Where

- \(\theta \in \mathbb{R}^{n+1} \)

- n is the number of features

- m is the number of training samples

And similar to (2), the minimum of (3) can be found by taking partial derivatives w.r.t. individual \(\theta_i \forall i \in (0, 1, 2, \cdots, n) \) and solving the equations by setting them to zero, i.e.

- where \(i \in (0, 1, 2, \cdots, n)\)

Through derivation one can find that \(\theta\) is given by,

Feature scaling is not necessary for the normal equation method. Reason being, the feature scaling was implemented to prevent any skewness in the contour plot of the cost function which affects the gradient descent but the analytical solution using normal equation does not suffer from the same drawback.

Comparison between Gradient Descent and Normal Equation

Given m training examples, and n features

| Gradient Descent | Normal Equation |

|---|---|

| Proper choice of \(\alpha\) is important | \(\alpha\) is not needed |

| Iterative Method | Direct Solution |

| Works well with large n. Complexity of algorithm is O(\(kn^2\)) | Slow for large n. Need to compute \((X^TX)^{-1}\). Generally the cost for computing the inverse is O(\(n^3\)) |

Generally if the number of features is less than 10000, one can use normal equation to get the solution beyond which the order of growth of the algorithm will make the computation very slow.

Non-invertibility

Matrices that do not have an inverse are called singular or degenerate.

Reasons for non-invertibility:

- Linearly dependent features i.e. redundant features.

- Too many features i.e. \(m \leq n\), then reduce the number of features or use regularization.

Calculating psuedo-inverse instead of inverse can also solve the issue of non-invertibility.

Implementation

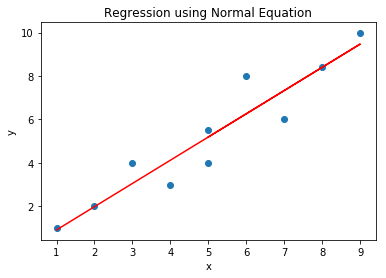

import matplotlib.pyplot as plt

import numpy as np

"""

Dummy Data for Linear Regression

"""

data = [(1, 1), (2, 2), (3, 4), (4, 3), (5, 5.5), (6, 8), (7, 6), (8, 8.4), (9, 10), (5, 4)]

"""

Matrix Operations

"""

inv = np.linalg.inv

mul = np.matmul

X = []

y = []

for x_i, y_i in data:

X.append([1, x_i])

y.append(y_i)

X = np.array(X)

y = np.atleast_2d(y).T

"""

Theta Calculation Using equation (5)

"""

theta = mul(mul(inv(mul(X.T, X)), X.T), y)

"""

Prediction of y using theta

"""

y_pred = np.matmul(X, theta)

"""

Plot Graph

"""

plt.scatter([i[0] for i in data], [i[1] for i in data])

plt.plot([i[0] for i in data], y_pred, 'r')

plt.title('Regression using Normal Equation')

plt.xlabel('x')

plt.ylabel('y')

plt.show()

Derivation of Normal Equation

Given the hypothesis,

- \(\theta = \begin{bmatrix} \theta_0 \\ \theta_1 \\ \vdots \\ \theta_n \end{bmatrix} \)

Let X be the design matrix wherein each row corresponds to the features in \(i^{th}\) sample of the m samples. Similarly, y is the vector with all the target values for all the m training samples. The cost function for the hypothesis (6) is given by (3). The cost function can be vectorized as follows for replacing the sigma operation with the sum over terms for matrix multiplication,

Since \(X\theta\) and \(y\) both are vectors, \((X\theta)^Ty = y^T(X\theta)\). So (7) can be further simplified as,

Taking partial derivative w.r.t \(\theta\) and equating to zero,

Let,

Taking partial derivatives,

Vectorizing (10),

Similarly, let,

Taking partial derivatives,

Vectorizing above equations,

Substitution (13) and (11) in (8),

If \(X^T X\) is invertible, then,

which is same as (5)

REFERENCES:

Machine Learning: Coursera - Normal Equation

Derivation of the Normal Equation for linear regression

Normal Equation and Matrix Calculus