Basics of Machine Learning Series

Pick a network architecture:

This usually means choosing the connectivity pattern between the neurons.

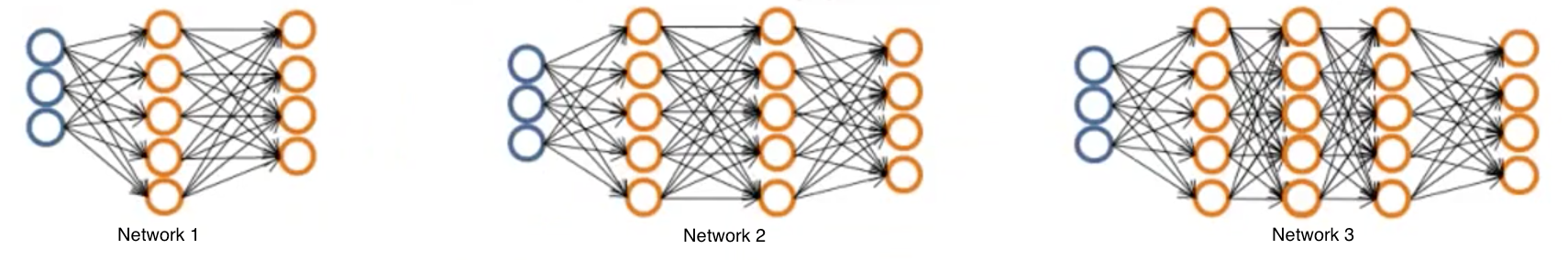

Fig. 1 shows a few examples of network architectures. All three networks have the same number of input and output units, because the number of input units equals the number of input features in a training example, while the number of output units equals the number of target classes.

As a general rule, when the number of classes is greater than 2, the class labels should be one-hot encoded to simplify classification. This can be seen as defining an individual logistic unit for each class, which determines whether or not an example belongs to that class.
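As a minimal sketch of one-hot encoding, assuming the classes are given as integer labels starting at 0 (the helper name `one_hot` and the sample labels are hypothetical, chosen only for illustration):

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (num_examples, num_classes) matrix with a single 1 per row."""
    labels = np.asarray(labels)
    encoded = np.zeros((labels.size, num_classes))
    # Row i gets a 1 in the column given by the i-th label.
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded

y = np.array([0, 2, 1, 2])      # hypothetical integer class labels
Y = one_hot(y, num_classes=3)   # one row per example, one column per class
```

Each column of `Y` can then serve as the target of one output unit, which acts as a logistic classifier for its own class.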

As a default, use 1 hidden layer; if more than 1 hidden layer is used, keep the same number of hidden units in each layer. Generally, the more hidden units the better, with the drawback being increased computational expense. The number of units in a hidden layer is generally comparable to, or a little greater than, the number of features (perhaps 2, 3, or 4 times as many).
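These defaults can be written down as a small helper. This is only a sketch: the function names, the default multiplier of 2, and the convention that each weight matrix \(\Theta^{(l)}\) has one extra column for a bias unit are assumptions made for illustration.

```python
def layer_sizes(num_features, num_classes, num_hidden_layers=1, hidden_multiplier=2):
    """Units per layer: input, equally sized hidden layers, output."""
    hidden_units = hidden_multiplier * num_features
    return [num_features] + [hidden_units] * num_hidden_layers + [num_classes]

def weight_shapes(sizes):
    """Shape of each weight matrix, with one extra column for the bias unit."""
    return [(sizes[l + 1], sizes[l] + 1) for l in range(len(sizes) - 1)]

sizes = layer_sizes(num_features=4, num_classes=3)   # hypothetical problem size
shapes = weight_shapes(sizes)
```

The total number of weights, and with it the cost of each training step, grows with the number of hidden units, which is the computational drawback mentioned above.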

Training a neural network:

- Random weight initialization

- Forward propagation to calculate \(h(x^{(i)})\)

- Calculate the cost, \(J(\Theta)\)

- Backpropagation to compute partial derivatives, \(\frac {\partial} {\partial \Theta_{jk}^{(l)}} J(\Theta)\)

- Use gradient checking to compare \(\frac {\partial} {\partial \Theta_{jk}^{(l)}} J(\Theta)\) computed by backpropagation against a numerical estimate of the gradient. Then disable gradient checking, since the numerical estimate is slow to compute.

- Use gradient descent or any other optimization algorithm to minimize \(J(\Theta)\).
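The steps above can be sketched end to end in NumPy. This is an illustrative sketch under stated assumptions, not a definitive implementation: it assumes sigmoid activations, an unregularized cross-entropy cost, randomly generated toy data, and plain batch gradient descent. All function names, the initialization range of 0.12, the learning rate, and the iteration count are hypothetical choices.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_weights(sizes, rng, epsilon=0.12):
    """Random initialization in [-epsilon, epsilon] to break symmetry."""
    return [rng.uniform(-epsilon, epsilon, (sizes[l + 1], sizes[l] + 1))
            for l in range(len(sizes) - 1)]

def forward(thetas, X):
    """Return each layer's input (with bias column), then the output h(x)."""
    activations, a = [], X
    for theta in thetas:
        a = np.hstack([np.ones((a.shape[0], 1)), a])  # prepend bias unit
        activations.append(a)
        a = sigmoid(a @ theta.T)
    activations.append(a)
    return activations

def cost(thetas, X, Y):
    """Unregularized cross-entropy cost J(Theta), averaged over examples."""
    h = forward(thetas, X)[-1]
    return -np.mean(np.sum(Y * np.log(h) + (1 - Y) * np.log(1 - h), axis=1))

def backprop(thetas, X, Y):
    """Partial derivatives of J with respect to every weight."""
    m = X.shape[0]
    acts = forward(thetas, X)
    delta = acts[-1] - Y                      # output-layer error
    grads = [None] * len(thetas)
    for l in range(len(thetas) - 1, -1, -1):
        grads[l] = delta.T @ acts[l] / m
        if l > 0:
            a = acts[l]
            # Propagate the error back, then drop the bias column.
            delta = ((delta @ thetas[l]) * a * (1 - a))[:, 1:]
    return grads

def numerical_gradient(thetas, X, Y, eps=1e-4):
    """Central-difference estimate of the gradient (slow; checking only)."""
    grads = [np.zeros_like(t) for t in thetas]
    for t, g in zip(thetas, grads):
        for idx in np.ndindex(t.shape):
            original = t[idx]
            t[idx] = original + eps
            plus = cost(thetas, X, Y)
            t[idx] = original - eps
            minus = cost(thetas, X, Y)
            t[idx] = original                 # restore the weight
            g[idx] = (plus - minus) / (2 * eps)
    return grads

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 4))                  # hypothetical toy inputs
Y = np.eye(3)[rng.integers(0, 3, size=20)]    # one-hot toy targets
thetas = init_weights([4, 8, 3], rng)

# Gradient checking: run once, then leave it out of the training loop.
analytic = backprop(thetas, X, Y)
numeric = numerical_gradient(thetas, X, Y)
max_diff = max(np.max(np.abs(a - n)) for a, n in zip(analytic, numeric))

# Batch gradient descent on J(Theta).
initial_cost = cost(thetas, X, Y)
alpha = 0.5
for _ in range(200):
    grads = backprop(thetas, X, Y)
    thetas = [t - alpha * g for t, g in zip(thetas, grads)]
final_cost = cost(thetas, X, Y)
```

If backpropagation is implemented correctly, `max_diff` should be very small, and `final_cost` should end up below `initial_cost`. The numerical estimate evaluates the cost twice per weight, which is why it is used only as a one-off check.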

For neural networks, the cost function \(J(\Theta)\) is non-convex, so gradient descent and similar optimizers can converge to a local minimum. In practice, however, this is usually not a serious problem.